
AWS Solutions Architect Associate Exam Wrong-Answer Notes 4 | aws | 2021. 3. 16. 23:12

1. io1

Provisioned IOPS SSD (io1) volume: optimized for I/O-intensive workloads, particularly database workloads that are sensitive to storage performance and consistency.

2. Two types of S3 Lifecycle actions

1). Transition actions: move objects to a different storage class after a specified period

2). Expiration actions: delete objects once they expire
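Both action types can live in the same lifecycle rule. Below is a minimal sketch of a lifecycle configuration written as a Python dict in the shape accepted by the S3 `PutBucketLifecycleConfiguration` API; the rule ID, the `logs/` prefix, and the day counts are hypothetical.

```python
import json

# Hypothetical rule: objects under logs/ move to Standard-IA after 30 days,
# to Glacier after 90 days, and are deleted after 365 days.
lifecycle_configuration = {
    "Rules": [
        {
            "ID": "archive-then-expire-logs",  # hypothetical rule name
            "Filter": {"Prefix": "logs/"},     # hypothetical key prefix
            "Status": "Enabled",
            # Transition actions: change the storage class after N days
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},
            ],
            # Expiration action: delete the object after N days
            "Expiration": {"Days": 365},
        }
    ]
}

print(json.dumps(lifecycle_configuration, indent=2))
```

With boto3, this dict would be passed as `s3.put_bucket_lifecycle_configuration(Bucket=..., LifecycleConfiguration=lifecycle_configuration)`.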

3. KDS(Amazon Kinesis Data Streams)

- A massively scalable and durable real-time data streaming service.

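- By default, data is accessible only for 24 hours after it is added to the stream (retention can be extended up to 7 days).

- The maximum size of a data blob is 1 MB. Each shard supports up to 1,000 PUT records per second (and up to 1 MB/s of writes).

- By supplying a partition key with each message, you can guarantee message ordering (records with the same partition key go to the same shard).

- The number of shards must be set manually.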
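A sketch of how a partition key picks a shard and how the per-shard write limits translate into a shard count. Kinesis hashes partition keys with MD5 into a 128-bit integer space; reading the digest as a big-endian integer and assuming the shards split that space into equal ranges are simplifications, and the helper names are hypothetical.

```python
import hashlib
import math

HASH_SPACE = 2 ** 128  # partition keys are hashed into a 128-bit integer space


def hash_key(partition_key: str) -> int:
    """MD5 digest of the partition key, read as an unsigned big-endian integer."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest, "big")


def shard_for(partition_key: str, shard_count: int) -> int:
    """Shard index, assuming the shards split the hash space into equal ranges."""
    return hash_key(partition_key) * shard_count // HASH_SPACE


def shards_needed(records_per_sec: float, mb_per_sec: float) -> int:
    """Shards required for a write load at 1,000 records/s and 1 MB/s per shard."""
    return max(1, math.ceil(records_per_sec / 1000), math.ceil(mb_per_sec / 1))


# The same key always maps to the same shard, which is what keeps its records ordered.
print(shard_for("sensor-17", 4), shard_for("sensor-17", 4))
print(shards_needed(records_per_sec=2500, mb_per_sec=1.5))  # 3
```

Since a shard preserves order and a key never moves between shards (until the stream is resharded), records for one key are read back in the order they were written.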

Further reading

Amazon Kinesis Data Streams enables real-time processing of streaming big data. It provides ordering of records, as well as the ability to read and/or replay records in the same order to multiple Amazon Kinesis Applications. The Amazon Kinesis Client Library (KCL) delivers all records for a given partition key to the same record processor, making it easier to build multiple applications reading from the same Amazon Kinesis data stream (for example, to perform counting, aggregation, and filtering).

AWS recommends Amazon Kinesis Data Streams for use cases with requirements that are similar to the following:

- Routing related records to the same record processor (as in streaming MapReduce). For example, counting and aggregation are simpler when all records for a given key are routed to the same record processor.

- Ordering of records. For example, you want to transfer log data from the application host to the processing/archival host while maintaining the order of log statements.

- Ability for multiple applications to consume the same stream concurrently. For example, you have one application that updates a real-time dashboard and another that archives data to Amazon Redshift. You want both applications to consume data from the same stream concurrently and independently.

- Ability to consume records in the same order a few hours later. For example, you have a billing application and an audit application that runs a few hours behind the billing application. Because Amazon Kinesis Data Streams stores data for up to 7 days, you can run the audit application up to 7 days behind the billing application.

4. ALB with SNI, wildcard, SAN

- With SNI, you can attach multiple SSL certificates to a single ALB.

- wildcard certificates only work for related subdomains that match a simple pattern

- SAN certificates can support many different domains
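To make the difference concrete, here is a simplified, hypothetical sketch of wildcard matching (a leading `*.` covers exactly one DNS label) and of picking a certificate by the SNI hostname. The real ALB uses smart certificate selection and falls back to its default certificate when nothing matches; this sketch just returns `None`.

```python
def wildcard_matches(pattern: str, hostname: str) -> bool:
    """A leading '*.' matches exactly one DNS label (simplified rule)."""
    pattern, hostname = pattern.lower(), hostname.lower()
    if not pattern.startswith("*."):
        return pattern == hostname
    suffix = pattern[1:]  # e.g. ".example.com"
    if not hostname.endswith(suffix):
        return False
    first_label = hostname[: -len(suffix)]
    return first_label != "" and "." not in first_label


def select_certificate(certs: dict, sni_hostname: str):
    """Return the first certificate whose domain list (SANs) matches, or None."""
    for cert_name, domains in certs.items():
        if any(wildcard_matches(d, sni_hostname) for d in domains):
            return cert_name
    return None


# Hypothetical certificates: one wildcard, one SAN certificate for unrelated domains.
certs = {
    "wildcard-cert": ["*.example.com"],
    "san-cert": ["shop.example.net", "blog.example.org"],
}
print(select_certificate(certs, "api.example.com"))   # wildcard-cert
print(select_certificate(certs, "blog.example.org"))  # san-cert
```

The wildcard certificate cannot cover `blog.example.org` or even `a.b.example.com`, which is exactly the gap a SAN certificate fills.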

5. Gateway Endpoint (instead of an Interface Endpoint)

- Only DynamoDB and S3 support gateway endpoints.

6. Redis auth(authentication)

- Redis authentication tokens enable Redis to require a token (password) before allowing clients to execute commands, thereby improving data security.

7. NAT gateway

- A NAT gateway supports 5 Gbps of bandwidth and automatically scales up to 45 Gbps.

- You can associate exactly one Elastic IP address with a NAT gateway.

- A NAT gateway supports the following protocols: TCP, UDP, and ICMP.

- You cannot associate a security group with a NAT gateway.

- You can use a network ACL to control the traffic to and from the subnet in which the NAT gateway is located.

- A NAT gateway can support up to 55,000 simultaneous connections to each unique destination.

8. Amazon Neptune

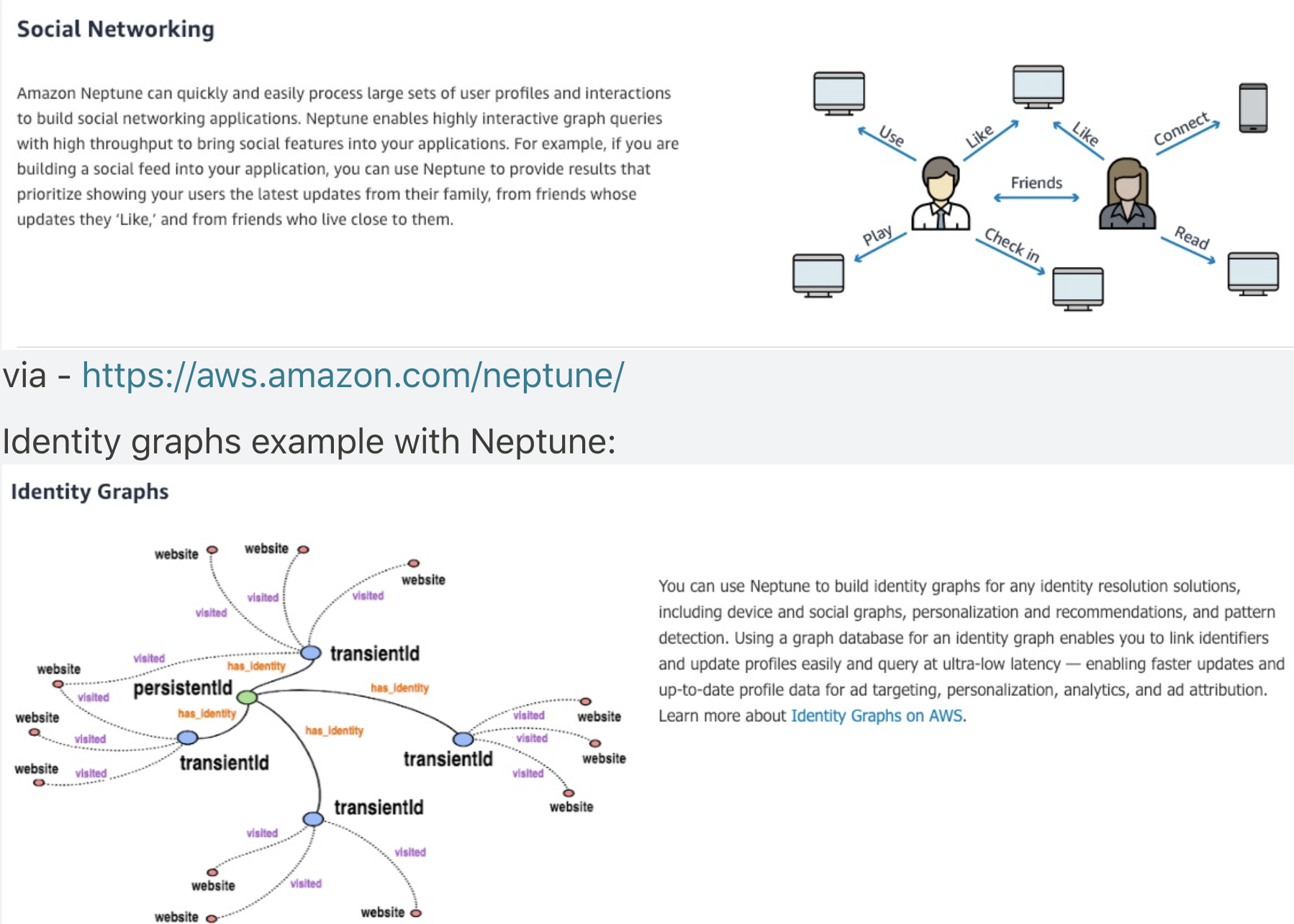

- Amazon Neptune is a fast, reliable, fully managed graph database service that makes it easy to build and run applications that work with highly connected datasets. The core of Amazon Neptune is a purpose-built, high-performance graph database engine optimized for storing billions of relationships and querying the graph with millisecond latency. Neptune powers graph use cases such as recommendation engines, fraud detection, knowledge graphs, drug discovery, and network security.

Amazon Neptune is highly available, with read replicas, point-in-time recovery, continuous backup to Amazon S3, and replication across Availability Zones. Neptune is secure with support for HTTPS encrypted client connections and encryption at rest. Neptune is fully managed, so you no longer need to worry about database management tasks such as hardware provisioning, software patching, setup, configuration, or backups.

Amazon Neptune can quickly and easily process large sets of user-profiles and interactions to build social networking applications. Neptune enables highly interactive graph queries with high throughput to bring social features into your applications. For example, if you are building a social feed into your application, you can use Neptune to provide results that prioritize showing your users the latest updates from their family, from friends whose updates they ‘Like,’ and from friends who live close to them.

9. AWS AppSync

AWS AppSync simplifies application development by letting you create a flexible API to securely access, manipulate, and combine data from one or more data sources. AppSync is a managed service that uses GraphQL to make it easy for applications to get exactly the data they need. With AppSync, you can build scalable applications, including those requiring real-time updates, on a range of data sources such as NoSQL data stores, relational databases, HTTP APIs, and your custom data sources with AWS Lambda.

10. AWS Single Sign-On

AWS Single Sign-On (SSO) makes it easy to centrally manage access to multiple AWS accounts and business applications and provide users with single sign-on access to all their assigned accounts and applications from one place. With AWS SSO, you can easily manage SSO access and user permissions to all of your accounts in AWS Organizations centrally. SSO configures and maintains all the necessary permissions for your accounts automatically, without requiring any additional setup in the individual accounts.

11. You cannot attach an IAM role to CloudFront.

12. How do you make S3 accessible only through CloudFront?

To restrict access to content that you serve from Amazon S3 buckets, follow these steps:

- Create a special CloudFront user called an origin access identity (OAI) and associate it with your distribution.

- Configure your S3 bucket permissions so that CloudFront can use the OAI to access the files in your bucket and serve them to your users. Make sure that users can’t use a direct URL to the S3 bucket to access a file there.

After you take these steps, users can only access your files through CloudFront, not directly from the S3 bucket.

In general, if you’re using an Amazon S3 bucket as the origin for a CloudFront distribution, you can either allow everyone to have access to the files there, or you can restrict access. If you restrict access by using, for example, CloudFront signed URLs or signed cookies, you also won’t want people to be able to view files by simply using the direct Amazon S3 URL for the file. Instead, you want them to only access the files by using the CloudFront URL, so your content remains protected.
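A minimal sketch of the bucket policy from the second step, built as a Python dict. The bucket name and OAI ID are hypothetical; the principal ARN follows the `CloudFront Origin Access Identity <ID>` format. Direct S3 URLs are denied only as long as nothing else (for example a public ACL) grants access.

```python
import json

BUCKET = "example-bucket"  # hypothetical bucket name
OAI_ID = "E2EXAMPLEOAI"    # hypothetical origin access identity ID

bucket_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "AllowCloudFrontOAIReadOnly",
            "Effect": "Allow",
            # Only the OAI is allowed to read objects; no other principal is granted access.
            "Principal": {
                "AWS": f"arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity {OAI_ID}"
            },
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{BUCKET}/*",
        }
    ],
}

print(json.dumps(bucket_policy, indent=2))
```

The JSON could then be applied with boto3's `s3.put_bucket_policy(Bucket=BUCKET, Policy=json.dumps(bucket_policy))`.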

13. SSE-C, SSE-KMS, SSE-S3, Client-Side Encryption

SSE-C - With Server-Side Encryption with Customer-Provided Keys (SSE-C), you manage the encryption keys and Amazon S3 manages the encryption.

SSE-KMS - AWS Key Management Service (AWS KMS) is a service that combines secure, highly available hardware and software to provide a key management system scaled for the cloud. When you use server-side encryption with AWS KMS (SSE-KMS), you can specify a customer-managed CMK that you have already created. However, you never get to see the actual key material, and each use of the key is logged in AWS CloudTrail, which gives you an audit trail.

SSE-S3 - When you use Server-Side Encryption with Amazon S3-Managed Keys (SSE-S3), each object is encrypted with a unique key. However, this option does not provide an audit trail of encryption-key usage.

Client-Side Encryption - Client-side encryption is the act of encrypting data before sending it to Amazon S3. To enable client-side encryption, you have the following options: use a customer master key (CMK) stored in AWS Key Management Service (AWS KMS), or use a master key that you store within your application. Because the customer in the exam scenario wanted to use an AWS-provided facility, this was not an option.
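The practical difference between the server-side options shows up in the request headers. This sketch builds the SSE-C headers from the S3 REST API (algorithm, base64 key, base64 MD5 of the key) and lists the single header that SSE-S3 and SSE-KMS need; the KMS key ID is a placeholder and `sse_c_headers` is a hypothetical helper.

```python
import base64
import hashlib
import os


def sse_c_headers(key: bytes) -> dict:
    """Headers S3 expects on every PUT/GET of an SSE-C object."""
    if len(key) != 32:
        raise ValueError("SSE-C requires a 256-bit (32-byte) key")
    return {
        "x-amz-server-side-encryption-customer-algorithm": "AES256",
        "x-amz-server-side-encryption-customer-key": base64.b64encode(key).decode("ascii"),
        # S3 uses the MD5 to check that the key arrived intact
        "x-amz-server-side-encryption-customer-key-MD5": base64.b64encode(
            hashlib.md5(key).digest()
        ).decode("ascii"),
    }


# SSE-S3 and SSE-KMS only name the mode; S3 or KMS holds the key for you.
SSE_S3_HEADERS = {"x-amz-server-side-encryption": "AES256"}
SSE_KMS_HEADERS = {
    "x-amz-server-side-encryption": "aws:kms",
    "x-amz-server-side-encryption-aws-kms-key-id": "<your-kms-key-id>",  # placeholder
}

# With SSE-C you generate and store this key yourself and must resend it on each request.
customer_key = os.urandom(32)
headers = sse_c_headers(customer_key)
```

If the customer key is lost, S3 cannot decrypt the object, which is the trade-off of managing keys yourself.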

14. Application Load Balancer + dynamic port mapping

Application Load Balancer can automatically distribute incoming application traffic across multiple targets, such as Amazon EC2 instances, containers, IP addresses, and Lambda functions. It can handle the varying load of your application traffic in a single Availability Zone or across multiple Availability Zones.

Dynamic port mapping with an Application Load Balancer makes it easier to run multiple tasks on the same Amazon ECS service on an Amazon ECS cluster.

15. Warm Standby, Backup and Restore, Pilot Light, Multi Site

Warm Standby - The term warm standby is used to describe a DR scenario in which a scaled-down version of a fully functional environment is always running in the cloud. A warm standby solution extends the pilot light elements and preparation. It further decreases the recovery time because some services are always running. By identifying your business-critical systems, you can fully duplicate these systems on AWS and have them always on.

Incorrect options:

Backup and Restore - In most traditional environments, data is backed up to tape and sent off-site regularly. If you use this method, it can take a long time to restore your system in the event of a disruption or disaster. Amazon S3 is an ideal destination for backup data that might be needed quickly to perform a restore. Transferring data to and from Amazon S3 is typically done through the network, and is therefore accessible from any location. Many commercial and open-source backup solutions integrate with Amazon S3.

Pilot Light - The term pilot light is often used to describe a DR scenario in which a minimal version of an environment is always running in the cloud. The idea of the pilot light is an analogy that comes from the gas heater. In a gas heater, a small flame that’s always on can quickly ignite the entire furnace to heat up a house. This scenario is similar to a backup-and-restore scenario. For example, with AWS you can maintain a pilot light by configuring and running the most critical core elements of your system in AWS. When the time comes for recovery, you can rapidly provision a full-scale production environment around the critical core.

Multi Site - A multi-site solution runs in AWS as well as on your existing on-site infrastructure, in an active-active configuration. The data replication method that you employ will be determined by the recovery point that you choose.

16. EMR

Scenario: A firm needs to create a daily big data analysis job that uses Spark to analyze online/offline sales and customer loyalty data and produce customized reports on a client-by-client basis. The job needs to read the data from Amazon S3 and write its output back to S3. Finally, the results need to be sent back to the firm's clients.

Which technology do you recommend to run the Big Data analysis job?

The answer to this question is EMR. EMR is the industry-leading cloud big data platform for processing vast amounts of data using open source tools such as Apache Spark, Apache Hive, Apache HBase, Apache Flink, Apache Hudi, and Presto. With EMR you can run Petabyte-scale analysis at less than half of the cost of traditional on-premises solutions and over 3x faster than standard Apache Spark. For short-running jobs, you can spin up and spin down clusters and pay per second for the instances used. For long-running workloads, you can create highly available clusters that automatically scale to meet demand. Amazon EMR uses Hadoop, an open-source framework, to distribute your data and processing across a resizable cluster of Amazon EC2 instances.

17. Redshift

Amazon Redshift is a fully-managed petabyte-scale cloud-based data warehouse product designed for large scale data set storage and analysis.

18. Amazon MQ

- Amazon MQ is a managed message broker service for Apache ActiveMQ that makes it easy to set up and operate message brokers in the cloud.

- it uses industry-standard APIs and protocols for messaging, including JMS, NMS, AMQP, STOMP, MQTT, and WebSocket.

- When migrating an existing message broker to AWS, use Amazon MQ.

19. Amazon Kinesis Data Streams

Amazon Kinesis Data Streams (KDS) is a massively scalable and durable real-time data streaming service. KDS can continuously capture gigabytes of data per second from hundreds of thousands of sources such as website clickstreams, database event streams, financial transactions, social media feeds, IT logs, and location-tracking events.

20. EBS volumes mounted in RAID 0 and RAID 1

With Amazon EBS, you can use any of the standard RAID configurations that you can use with a traditional bare metal server, as long as that particular RAID configuration is supported by the operating system for your instance. This is because all RAID is accomplished at the software level. For greater I/O performance than you can achieve with a single volume, RAID 0 can stripe multiple volumes together; for on-instance redundancy, RAID 1 can mirror two volumes together.

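EBS volumes (regardless of RAID type) are attached to a single instance and cannot generally be shared across instances; the exception is EBS Multi-Attach for io1/io2 volumes within the same Availability Zone.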
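Idealized arithmetic for striping vs. mirroring identical volumes, as a hypothetical helper. Real results are further capped by the instance's EBS bandwidth, so treat these as upper bounds.

```python
def raid_array(volume_size_gib: int, volume_iops: int, count: int, level: int) -> dict:
    """Approximate usable size and IOPS for `count` identical EBS volumes in software RAID."""
    if count < 2:
        raise ValueError("RAID needs at least two volumes")
    if level == 0:
        # Striping: data is spread across all volumes, so capacity and IOPS add up
        return {"size_gib": volume_size_gib * count, "iops": volume_iops * count}
    if level == 1:
        # Mirroring: every write goes to all volumes, so you get one volume's worth
        return {"size_gib": volume_size_gib, "iops": volume_iops}
    raise ValueError("only RAID 0 and RAID 1 are modeled here")


print(raid_array(500, 4000, 2, level=0))  # {'size_gib': 1000, 'iops': 8000}
print(raid_array(500, 4000, 2, level=1))  # {'size_gib': 500, 'iops': 4000}
```

RAID 0 buys performance at the cost of losing the whole array if one volume fails; RAID 1 buys on-instance redundancy at the cost of half the capacity.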

21. Amazon Kinesis with Amazon Simple Notification Service (SNS)

Amazon Kinesis makes it easy to collect, process, and analyze real-time, streaming data so you can get timely insights and react quickly to new information. Amazon Kinesis offers key capabilities to cost-effectively process streaming data at any scale, along with the flexibility to choose the tools that best suit the requirements of your application.

With Amazon Kinesis, you can ingest real-time data such as video, audio, application logs, website clickstreams, and IoT telemetry data for machine learning, analytics, and other applications. Amazon Kinesis enables you to process and analyze data as it arrives and respond instantly instead of having to wait until all your data is collected before the processing can begin.

Kinesis will be great for event streaming from the IoT devices, but not for sending notifications as it doesn't have such a feature.

Amazon Simple Notification Service (SNS) is a highly available, durable, secure, fully managed pub/sub messaging service that enables you to decouple microservices, distributed systems, and serverless applications. Amazon SNS provides topics for high-throughput, push-based, many-to-many messaging. SNS is a notification service and will be perfect for this use case.

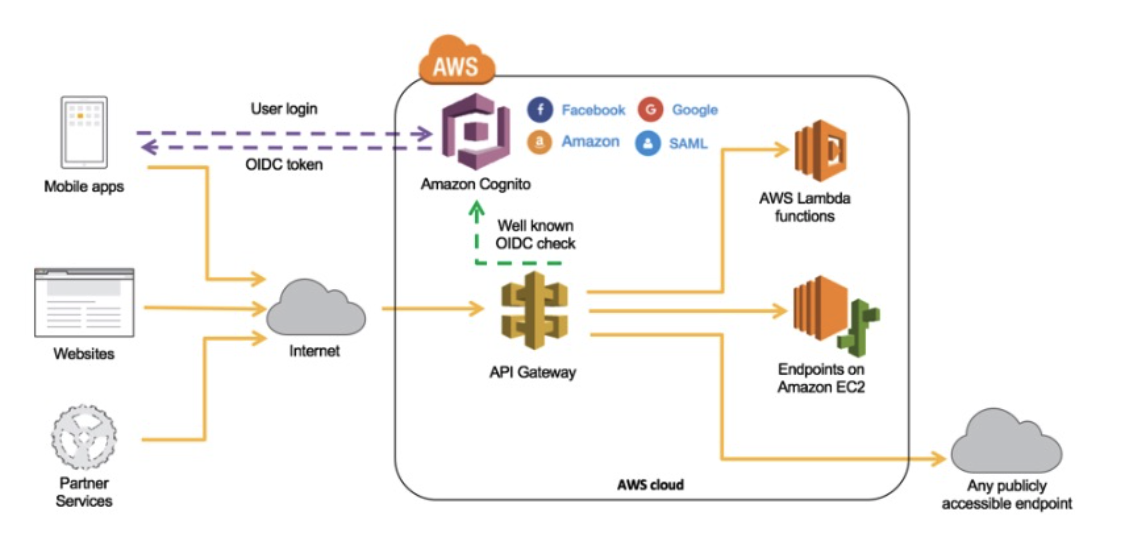

22. Amazon Cognito

Amazon Cognito lets you add user sign-up, sign-in, and access control to your web and mobile apps quickly and easily. Amazon Cognito scales to millions of users and supports sign-in with social identity providers, such as Facebook, Google, and Amazon, and enterprise identity providers via SAML 2.0. Here Cognito is the best technology choice for managing mobile user accounts.

23. Connecting on-premises to AWS: Direct Connect as a primary connection, Site-to-Site VPN as a backup connection

Direct Connect connects over a private, dedicated link; Site-to-Site VPN connects over the internet.

Use Direct Connect as a primary connection

- AWS Direct Connect lets you establish a dedicated network connection between your network and one of the AWS Direct Connect locations. Using industry-standard 802.1q VLANs, this dedicated connection can be partitioned into multiple virtual interfaces. AWS Direct Connect does not involve the Internet; instead, it uses dedicated, private network connections between your intranet and Amazon VPC.

Use Site to Site VPN as a backup connection

- AWS Site-to-Site VPN enables you to securely connect your on-premises network or branch office site to your Amazon Virtual Private Cloud (Amazon VPC). You can securely extend your data center or branch office network to the cloud with an AWS Site-to-Site VPN connection. A VPC VPN Connection utilizes IPSec to establish encrypted network connectivity between your intranet and Amazon VPC over the Internet. VPN Connections can be configured in minutes and are a good solution if you have an immediate need, have low to modest bandwidth requirements, and can tolerate the inherent variability in Internet-based connectivity.

Direct Connect as the primary connection guarantees great performance and security (because the connection is private). Using Direct Connect as the backup would also work, but it would probably carry the same risk of failing. Therefore, since we don't mind going over the public internet (which is reliable, but less secure because traffic travels over a public route), we should use a Site-to-Site VPN as the backup connection.

24. To set up Amazon SES event notifications to an Amazon Kinesis data stream in another account, do the following:

- Start at the SES source. Update your configuration settings in SES to push to an Amazon Simple Notification Service (Amazon SNS) topic. The updated setting configures AWS Lambda to subscribe to the topic as a trigger.

- Subscribe to SNS.

- Set up cross-account access.

- Set up Lambda to send data to Amazon Kinesis Data Streams. Lambda then uses the code to grab the full event from the Amazon SNS topic. Lambda also assumes a role in another account to put the records into the data stream.

- Use the Amazon Kinesis Client Library (KCL) to process records in the stream.

- When Lambda is directly configured with SES, Amazon SES sends an event record to Lambda every time it receives an incoming message. However, it omits the body of the message.

- AWS Lambda can write across accounts, and an SNS topic cannot subscribe to another SNS topic.

- AWS does not suggest using SES across accounts. However, there are certain conditions under which it is allowed. Also, SES cannot write directly to Kinesis Data Streams.
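A sketch of the Lambda step in the SES → SNS → Lambda → Kinesis chain. It assumes each SNS record's `Message` is the SES notification JSON with a `mail.messageId` field and uses that ID as the partition key; the role ARN, session name, and stream name in the comments are hypothetical, and the boto3 calls are left as comments so the sketch runs without AWS.

```python
import json


def to_kinesis_records(sns_event: dict) -> list:
    """Turn SNS-delivered SES notifications into Kinesis PutRecords entries."""
    records = []
    for record in sns_event.get("Records", []):
        message = record["Sns"]["Message"]  # SES notification as a JSON string
        notification = json.loads(message)
        # Key by SES message ID so all events for one email land on the same shard
        partition_key = notification.get("mail", {}).get("messageId", "unknown")
        records.append({"Data": message.encode("utf-8"), "PartitionKey": partition_key})
    return records


# Inside the Lambda handler, the cross-account write would look roughly like:
#   creds = boto3.client("sts").assume_role(
#       RoleArn="arn:aws:iam::<other-account-id>:role/<kinesis-writer-role>",
#       RoleSessionName="ses-forwarder")["Credentials"]
#   kinesis = boto3.client("kinesis",
#       aws_access_key_id=creds["AccessKeyId"],
#       aws_secret_access_key=creds["SecretAccessKey"],
#       aws_session_token=creds["SessionToken"])
#   kinesis.put_records(StreamName="<stream-name>", Records=to_kinesis_records(event))
```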

25. Multi-AZ

- A good disaster recovery strategy.

- minimal downtime and data loss while ensuring top application performance.

- Read replicas are used for read performance, not as a complete fault-tolerant solution.

26. from S3 Standard to S3 One Zone-IA

- To move objects from S3 Standard to S3 One Zone-IA or S3 Standard-IA with a lifecycle transition, the objects must first be stored in S3 Standard for a minimum of 30 days.
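A small, hypothetical pre-check for that 30-day rule, assuming the rule dict uses the same `Transitions` shape as the S3 lifecycle API. S3 validates this itself when you submit the configuration; a local check just surfaces the mistake earlier.

```python
# Minimum days an object must spend in S3 Standard before transitioning to these classes
MIN_DAYS_BEFORE_TRANSITION = {"STANDARD_IA": 30, "ONEZONE_IA": 30}


def check_transitions(rule: dict) -> list:
    """Return a description of every transition that violates the 30-day minimum."""
    problems = []
    for transition in rule.get("Transitions", []):
        minimum = MIN_DAYS_BEFORE_TRANSITION.get(transition["StorageClass"])
        days = transition.get("Days")  # date-based transitions are not checked here
        if minimum is not None and days is not None and days < minimum:
            problems.append(
                f'{transition["StorageClass"]} transition at day {days} is below {minimum}'
            )
    return problems


print(check_transitions({"Transitions": [{"Days": 7, "StorageClass": "ONEZONE_IA"}]}))
```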

27. Amazon Aurora Global Database

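: Used to serve globally distributed applications.

- A single database spans multiple regions.

- Replicates data to multiple regions without affecting database performance, enabling good performance in each region.

- Also provides disaster recovery.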

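28. When multiple scale-in (scale-out) policies are configured

- Suppose one policy adds two instances under a certain condition and another adds one instance. If both conditions are met at the same time, Auto Scaling executes the policy that provides the larger capacity (it adds two instances).

- Conflicting policies are dangerous. If, in a given situation, one condition triggers a scale-in and another triggers a scale-out, the group may shrink and then immediately grow again.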
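The selection rule can be sketched as below. AWS documents that when several policies trigger at once, Amazon EC2 Auto Scaling picks the one yielding the largest capacity for both scale out and scale in, so if a scale-in and a scale-out fire at the same moment, scale-out wins. The function name and the clamping to min/max size are illustrative assumptions.

```python
def resolve_capacity(current: int, adjustments: list, min_size: int, max_size: int) -> int:
    """Apply the simultaneous policy whose result is the largest capacity, within group bounds."""
    if not adjustments:
        return current
    candidates = [current + change for change in adjustments]
    return max(min_size, min(max_size, max(candidates)))


print(resolve_capacity(4, [+2, +1], min_size=1, max_size=10))  # 6: the +2 policy wins
print(resolve_capacity(4, [-1, +2], min_size=1, max_size=10))  # 6: scale-out wins the conflict
```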

29. Transit Gateway

- Connects VPCs and on-premises networks through a single gateway (a central hub).

- More efficient to manage, and reduces cost.

- Transit Gateway provides several advantages over Transit VPC:

1). Transit Gateway abstracts away the complexity of maintaining VPN connections with hundreds of VPCs.

2). Transit Gateway removes the need to manage and scale EC2-based software appliances. AWS is responsible for managing all resources needed to route traffic.

3). Transit Gateway removes the need to manage high availability by providing a highly available and redundant Multi-AZ infrastructure.

4). Transit Gateway improves bandwidth for inter-VPC communication to burst speeds of 50 Gbps per AZ.

5). Transit Gateway streamlines user costs to a simple per-hour, per-GB-transferred model.

6). Transit Gateway decreases latency by removing EC2 proxies and the need for VPN encapsulation.

30. VPC peering

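- The easiest way to connect two VPCs.

- Peering works across different accounts and different regions.

- You are charged only for the traffic that crosses the peering connection.

- Both IPv4 and IPv6 are supported.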

31. AWS Cost Explorer, AWS Compute Optimizer, S3 Analytics Storage Class analysis, AWS Trusted Advisor

AWS Cost Explorer: identifies EC2 instances with low utilization that could be downsized.

AWS Compute Optimizer: applies machine learning to your utilization metrics and recommends EC2 instance types that save money and improve performance. Compute Optimizer helps you choose the optimal Amazon EC2 instance types, including those that are part of an Amazon EC2 Auto Scaling group, based on your utilization data.

S3 Analytics Storage Class analysis: analyzes storage access patterns to help you decide when to move data to which storage class.

AWS Trusted Advisor: checks for Reserved Instances that will expire within the next 30 days or that expired within the last 30 days.

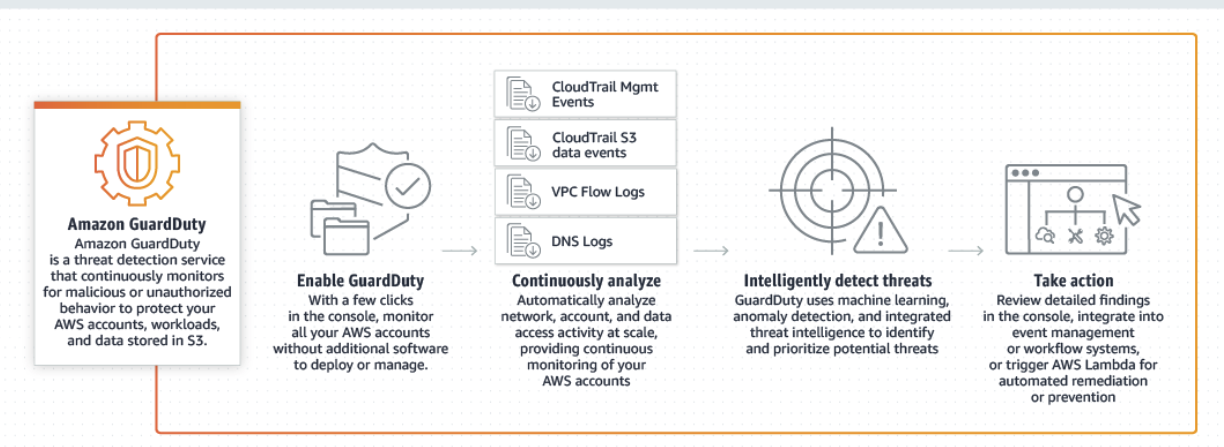

32. Amazon GuardDuty

- A threat detection service that detects suspicious or unauthorized activity. It covers AWS accounts, workloads, and data stored in S3.

- Uses machine learning for analysis.

- Analyzes VPC Flow Logs, DNS logs, and CloudTrail events.

33. Route 53 active-passive failover

Use active-passive failover when you want a primary resource or group of resources to be available the majority of the time and you want a secondary resource or group of resources to be on standby in case all the primary resources become unavailable. When responding to queries, Route 53 includes only healthy primary resources. If all the primary resources are unhealthy, Route 53 begins to include only the healthy secondary resources in response to DNS queries.
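The answer-selection rule above, as a hypothetical sketch where each endpoint maps to its health-check result:

```python
def answer_failover(primaries: dict, secondaries: dict) -> list:
    """Endpoints Route 53 would answer with: healthy primaries, else healthy secondaries."""
    healthy_primary = [endpoint for endpoint, ok in primaries.items() if ok]
    if healthy_primary:
        return healthy_primary
    # Only when every primary is unhealthy do the secondaries start appearing in answers
    return [endpoint for endpoint, ok in secondaries.items() if ok]


print(answer_failover({"primary-1": True, "primary-2": False}, {"dr-1": True}))  # ['primary-1']
print(answer_failover({"primary-1": False}, {"dr-1": True}))                     # ['dr-1']
```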

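34. Route 53 latency-based routing

: When servers exist in multiple regions, Route 53 sends each request to the region that gives the requester the lowest latency.

For example, with servers in the US and Korea, users in Korea and Japan are routed to the Korean server, while users in the US and Canada are routed to the US server.

35. Route 53 health check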

Route 53 DNS Failover handles load balancer and application failures by integrating with ELB behind the scenes. Once enabled, Route 53 automatically configures and manages health checks for individual ELB nodes. Route 53 also takes advantage of the EC2 instance health checking that ELB performs. By combining the results of health checks of your EC2 instances and your ELBs, Route 53 DNS Failover can evaluate the health of the load balancer and the health of the application running on the EC2 instances behind it. In other words, if any part of the stack goes down, Route 53 detects the failure and routes traffic away from the failed endpoint.

Using Route 53 DNS Failover, you can run your primary application simultaneously in multiple AWS regions around the world and fail over across regions. Your end-users will be routed to the closest (by latency), healthy region for your application. Route 53 automatically removes from service any region where your application is unavailable: it will pull an endpoint out of service if there is a region-wide connectivity or operational issue, if your application goes down in that region, or if your ELB or EC2 instances go down in that region.
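Combining latency-based routing with health checks boils down to "lowest latency among healthy regions". A hypothetical sketch; the latency figures are made-up measurements for a user in Seoul.

```python
def route_latency_healthy(latency_ms: dict, healthy: dict):
    """Pick the healthy region with the lowest measured latency for this user, or None."""
    candidates = [(ms, region) for region, ms in latency_ms.items() if healthy.get(region, False)]
    return min(candidates)[1] if candidates else None


seoul_user = {"ap-northeast-2": 30, "us-east-1": 180}  # hypothetical latencies in ms
print(route_latency_healthy(seoul_user, {"ap-northeast-2": True, "us-east-1": True}))   # ap-northeast-2
print(route_latency_healthy(seoul_user, {"ap-northeast-2": False, "us-east-1": True}))  # us-east-1
```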

36. Amazon FSx for Windows File Server

Amazon FSx for Windows File Server provides fully managed, highly reliable, and scalable file storage that is accessible over the industry-standard Server Message Block (SMB) protocol. It is built on Windows Server, delivering a wide range of administrative features such as user quotas, end-user file restore, and Microsoft Active Directory (AD) integration. It offers single-AZ and multi-AZ deployment options, fully managed backups, and encryption of data at rest and in transit. You can optimize cost and performance for your workload needs with SSD and HDD storage options; and you can scale storage and change the throughput performance of your file system at any time.

With Amazon FSx, you get highly available and durable file storage starting from $0.013 per GB-month. Data deduplication enables you to optimize costs even further by removing redundant data. You can increase your file system storage and scale throughput capacity at any time, making it easy to respond to changing business needs. There are no upfront costs or licensing fees.

37. Amazon FSx for Lustre

Amazon FSx for Lustre is a fully managed service that provides cost-effective, high-performance storage for compute workloads. Many workloads such as machine learning, high performance computing (HPC), video rendering, and financial simulations depend on compute instances accessing the same set of data through high-performance shared storage. Lustre is Linux based.

38. WAF

- WAF can be used only with CloudFront, ALB, and API Gateway.

When you use AWS WAF with CloudFront, you can protect your applications running on any HTTP webserver, whether it's a webserver that's running in Amazon Elastic Compute Cloud (Amazon EC2) or a web server that you manage privately. You can also configure CloudFront to require HTTPS between CloudFront and your own webserver, as well as between viewers and CloudFront.

AWS WAF is tightly integrated with Amazon CloudFront and the Application Load Balancer (ALB), services that AWS customers commonly use to deliver content for their websites and applications. When you use AWS WAF on Amazon CloudFront, your rules run in all AWS Edge Locations, located around the world close to your end-users. This means security doesn’t come at the expense of performance. Blocked requests are stopped before they reach your web servers. When you use AWS WAF on Application Load Balancer, your rules run in the region and can be used to protect internet-facing as well as internal load balancers.

39. Amazon EventBridge

- A serverless event bus that makes it easy to connect applications; it is event-based and works asynchronously to decouple the system architecture.

- recommended when you want to build an application that reacts to events from SaaS applications and/or AWS services.

- the only event-based service that integrates directly with third-party SaaS partners

- Amazon EventBridge also automatically ingests events from over 90 AWS services without requiring developers to create any resources in their account. Further, Amazon EventBridge uses a defined JSON-based structure for events and allows you to create rules that are applied across the entire event body to select events to forward to a target. Amazon EventBridge currently supports over 15 AWS services as targets, including AWS Lambda, Amazon SQS, Amazon SNS, and Amazon Kinesis Streams and Firehose, among others. At launch, Amazon EventBridge had limited throughput (see Service Limits), which can be increased upon request, and a typical latency of around half a second.
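Rules select events by matching a JSON event pattern against the event body. The function below is a deliberately simplified, hypothetical matcher that covers only lists of allowed values and nested objects; real patterns also support content filters such as prefix, numeric ranges, and anything-but, which are not modeled.

```python
def matches(pattern: dict, event: dict) -> bool:
    """Simplified EventBridge-style matching of `pattern` against `event`."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern: recurse into the nested event field
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        else:
            # Leaf: a list of allowed values; an array in the event matches if any element is allowed
            values = actual if isinstance(actual, list) else [actual]
            if not any(value in expected for value in values):
                return False
    return True


event = {
    "source": "aws.ec2",
    "detail-type": "EC2 Instance State-change Notification",
    "detail": {"instance-id": "i-0123456789abcdef0", "state": "terminated"},
}
rule_pattern = {
    "source": ["aws.ec2"],
    "detail-type": ["EC2 Instance State-change Notification"],
    "detail": {"state": ["stopped", "terminated"]},
}
print(matches(rule_pattern, event))  # True
```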

40. Cognito User Pools

- Handles user (membership) management for you.

A user pool is a user directory in Amazon Cognito.

You can leverage Amazon Cognito User Pools to either provide built-in user management or integrate with external identity providers, such as Facebook, Twitter, Google+, and Amazon. Whether your users sign-in directly or through a third party, all members of the user pool have a directory profile that you can access through a Software Development Kit (SDK).

User pools provide: 1. Sign-up and sign-in services. 2. A built-in, customizable web UI to sign in users. 3. Social sign-in with Facebook, Google, Login with Amazon, and Sign in with Apple, as well as sign-in with SAML identity providers from your user pool. 4. User directory management and user profiles. 5. Security features such as multi-factor authentication (MFA), checks for compromised credentials, account takeover protection, and phone and email verification. 6. Customized workflows and user migration through AWS Lambda triggers.

After creating an Amazon Cognito user pool, in API Gateway, you must then create a COGNITO_USER_POOLS authorizer that uses the user pool.

41. How do you make RDS, EBS, and S3 available in another region?

RDS: place Read Replicas in the other region (cross-region read replicas).

EBS: take EBS snapshots of your data volumes and configure them to be copied across regions.

S3: enable Cross-Region Replication (CRR).

42. SNS, SQS

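SNS uses a publish-subscribe (pub-sub) model in which messages are 'pushed' to clients.

This mechanism assumes that the receiving application exists and is ready to receive the message.

SQS, on the other hand, uses polling: clients poll for messages whenever they want.

This is the key difference.

For reference, KDS (Kinesis Data Streams) is also pub-sub style in that multiple consumers can read the same stream, although standard consumers still pull records with GetRecords.

EventBridge likewise handles events as soon as they arrive (matching events are pushed to targets).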
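A toy in-memory model of push vs. poll. `Topic` and `Queue` are hypothetical stand-ins, not the SNS/SQS APIs; real SQS also hides a received message (visibility timeout) until the consumer deletes it, which this sketch skips.

```python
from collections import deque


class Topic:
    """SNS-like: publish() immediately pushes the message to every subscriber."""

    def __init__(self):
        self.subscribers = []

    def subscribe(self, callback):
        self.subscribers.append(callback)

    def publish(self, message):
        for deliver in self.subscribers:
            deliver(message)  # the subscriber must be there to receive it now


class Queue:
    """SQS-like: messages wait until a consumer decides to poll for them."""

    def __init__(self):
        self._messages = deque()

    def send(self, message):
        self._messages.append(message)

    def receive(self, max_messages=1):
        batch = []
        while self._messages and len(batch) < max_messages:
            batch.append(self._messages.popleft())
        return batch


inbox = []
topic = Topic()
topic.subscribe(inbox.append)
topic.publish("order-created")  # inbox is filled immediately

queue = Queue()
queue.send("order-created")
print(queue.receive())  # ['order-created'], only when the consumer asks
```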

43. ASG

- Min and max capacity must be specified; desired capacity is optional.

- If the service is so critical that two EC2 instances must always be running, set min to 4 rather than 2 and launch 2 instances in each of two AZs, because an entire AZ may go down.
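The same reasoning generalizes: if N instances must survive the loss of any one of k AZs, each AZ needs ceil(N / (k - 1)) instances. A hypothetical helper, assuming instances are spread evenly across AZs:

```python
import math


def min_capacity_for_az_failure(required: int, az_count: int) -> dict:
    """Instances per AZ and total min size so `required` instances survive one AZ outage."""
    if az_count < 2:
        raise ValueError("need at least two AZs to survive an AZ outage")
    per_az = math.ceil(required / (az_count - 1))
    return {"per_az": per_az, "min_size": per_az * az_count}


print(min_capacity_for_az_failure(2, 2))  # {'per_az': 2, 'min_size': 4}
print(min_capacity_for_az_failure(2, 3))  # {'per_az': 1, 'min_size': 3}
```

Spreading across three AZs lowers the required minimum from 4 to 3 for the same guarantee.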

44. Transit VPC

: Transit VPC uses customer-managed Amazon Elastic Compute Cloud (Amazon EC2) VPN instances in a dedicated transit VPC with an Internet gateway. This design requires the customer to deploy, configure, and manage EC2-based VPN appliances, which will result in additional EC2, and potentially third-party product and licensing charges. Note that this design will generate additional data transfer charges for traffic traversing the transit VPC: data is charged when it is sent from a spoke VPC to the transit VPC, and again from the transit VPC to the on-premises network or a different AWS Region. Transit VPC is not the right choice here.

44. VPC endpoint

A VPC endpoint allows you to privately connect your VPC to supported AWS services without requiring an Internet gateway, NAT device, VPN connection, or AWS Direct Connect connection. Endpoints are virtual devices that are horizontally scaled, redundant, and highly available VPC components. They allow communication between instances in your VPC and services without imposing availability risks or bandwidth constraints on your network traffic.

VPC endpoints enable you to reduce data transfer charges resulting from network communication between private VPC resources (such as Amazon Elastic Compute Cloud (EC2) instances) and AWS services (such as Amazon Quantum Ledger Database, or QLDB). Without VPC endpoints configured, communications that originate from within a VPC destined for public AWS services must egress AWS to the public Internet in order to access AWS services. This network path incurs outbound data transfer charges. Data transfer charges for traffic egressing from Amazon EC2 to the Internet vary based on volume. With VPC endpoints configured, communication between your VPC and the associated AWS service does not leave the Amazon network. If your workload requires you to transfer significant volumes of data between your VPC and AWS, you can reduce costs by leveraging VPC endpoints.

In larger multi-account AWS environments, network design can vary considerably. Consider an organization that has built a hub-and-spoke network with AWS Transit Gateway. VPCs have been provisioned into multiple AWS accounts, perhaps to facilitate network isolation or to enable delegated network administration. When deploying distributed architectures such as this, a popular approach is to build a "shared services VPC," which provides access to services required by workloads in each of the VPCs. This might include directory services or VPC endpoints. Sharing resources from a central location instead of building them in each VPC may reduce administrative overhead and cost.

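45. User data

- By default, user data runs only when the instance is first launched.

- To run it on every instance restart as well, you need to change the configuration.

- User data runs with root privileges, so you don't need to prefix commands with sudo.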
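One way to change that configuration is multipart user data whose cloud-config part sets `cloud_final_modules: - [scripts-user, always]`, following the approach AWS's Knowledge Center describes; exact behavior depends on the AMI's cloud-init version. This sketch assembles that MIME document with Python's standard `email` package; the shell command and log path are hypothetical.

```python
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText

# cloud-config part: run the user-data script module on every boot, not just the first
CLOUD_CONFIG = """#cloud-config
cloud_final_modules:
- [scripts-user, always]
"""

# Shell script part: runs as root, so no sudo is needed (hypothetical command)
SHELL_SCRIPT = """#!/bin/bash
echo "booted at $(date)" >> /var/log/boot-history.log
"""


def build_user_data() -> str:
    """Return a multipart/mixed user-data document with both parts."""
    message = MIMEMultipart()  # defaults to multipart/mixed
    message.attach(MIMEText(CLOUD_CONFIG, "cloud-config"))  # text/cloud-config
    message.attach(MIMEText(SHELL_SCRIPT, "x-shellscript"))  # text/x-shellscript
    return message.as_string()


user_data = build_user_data()
print(user_data)
```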

46. GuardDuty

- Disabling GuardDuty deletes all the data collected so far.

- Suspending GuardDuty stops the service but keeps the data collected so far.

47. DNS private hosted zones

- To use a private hosted zone, DNS hostnames and DNS resolution must first be enabled on the VPC.

48. Access Advisor

- Shows which permissions (services) the account's IAM users and roles have used over the past several months.

49. EBS volume

An EBS volume is AZ-locked (it is tied to a single Availability Zone).

50. Cognito Authentication via Cognito User Pools

- If you want to separate user authentication from your app so that the app can focus only on its business logic, use this service.

51. Dedicated Instance, Dedicated Host

Dedicated Instances - Dedicated Instances are Amazon EC2 instances that run in a virtual private cloud (VPC) on hardware that's dedicated to a single customer. Dedicated Instances that belong to different AWS accounts are physically isolated at a hardware level, even if those accounts are linked to a single-payer account. However, Dedicated Instances may share hardware with other instances from the same AWS account that are not Dedicated Instances.

A Dedicated Host is also a physical server that's dedicated for your use. With a Dedicated Host, you have visibility and control over how instances are placed on the server.

52. Granting access to an SNS topic

- Add a policy to an IAM user or group. The simplest way to give users permissions to topics or queues is to create a group and add the appropriate policy to the group and then add users to that group. It's much easier to add and remove users from a group than to keep track of which policies you set on individual users.

- Add a policy to a topic or queue. If you want to give permissions to a topic or queue to another AWS account, the only way you can do that is by adding a policy that has as its principal the AWS account you want to give permissions to.

In most cases option 1 is enough, but to let another account access your SNS topic you have to use option 2.

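A minimal sketch of option 2, assuming hypothetical account IDs and a hypothetical topic named `MyTopic`: a topic (resource-based) policy whose principal is the other AWS account. The document could be applied with the SNS SetTopicAttributes API using the `Policy` attribute (for example boto3's `set_topic_attributes(TopicArn=..., AttributeName="Policy", AttributeValue=json.dumps(policy))`); keep in mind that this replaces the whole topic policy, including the default statement.

```python
import json

# Hypothetical identifiers, for illustration only.
TOPIC_OWNER_ACCOUNT = "444455556666"
PUBLISHER_ACCOUNT = "111122223333"
TOPIC_ARN = f"arn:aws:sns:us-east-1:{TOPIC_OWNER_ACCOUNT}:MyTopic"


def cross_account_publish_policy(topic_arn: str, publisher_account: str) -> dict:
    """Build an SNS topic policy that lets another AWS account publish to the topic."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCrossAccountPublish",
                "Effect": "Allow",
                # The principal is the *other* account: this is what makes it cross-account.
                "Principal": {"AWS": f"arn:aws:iam::{publisher_account}:root"},
                "Action": "sns:Publish",
                "Resource": topic_arn,
            }
        ],
    }


policy = cross_account_publish_policy(TOPIC_ARN, PUBLISHER_ACCOUNT)
print(json.dumps(policy, indent=2))
```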

53. X-Ray

AWS X-Ray helps developers analyze and debug production, distributed applications, such as those built using a microservices architecture. With X-Ray, you can understand how your application and its underlying services are performing to identify and troubleshoot the root cause of performance issues and errors. X-Ray provides an end-to-end view of requests as they travel through your application, and shows a map of your application’s underlying components.

You can use X-Ray to collect data across AWS Accounts. The X-Ray agent can assume a role to publish data into an account different from the one in which it is running. This enables you to publish data from various components of your application into a central account.

Example use case:

The engineering team at the company would like to debug and trace data across these AWS accounts and visualize it in a centralized account.

54. WAF

AWS WAF is a web application firewall service that lets you monitor web requests and protect your web applications from malicious requests. Use AWS WAF to block or allow requests based on conditions that you specify, such as the IP addresses. You can also use AWS WAF preconfigured protections to block common attacks like SQL injection or cross-site scripting.

Configure AWS WAF on the Application Load Balancer in a VPC

You can use AWS WAF with your Application Load Balancer to allow or block requests based on the rules in a web access control list (web ACL). Geographic (Geo) Match Conditions in AWS WAF allows you to use AWS WAF to restrict application access based on the geographic location of your viewers. With geo match conditions you can choose the countries from which AWS WAF should allow access.

Geo match conditions are important for many customers. For example, legal and licensing requirements restrict some customers from delivering their applications outside certain countries. These customers can configure a whitelist that allows only viewers in those countries. Other customers need to prevent the downloading of their encrypted software by users in certain countries. These customers can configure a blacklist so that end-users from those countries are blocked from downloading their software.
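The whitelist and blacklist cases above can be sketched as two rules, written here as Python dicts in the current AWS WAF (WAFv2) rule JSON format. Note that WAFv2 calls this a `GeoMatchStatement` rather than the WAF Classic "geo match condition" the text describes. The rule names, priorities, and country lists are hypothetical; for an Application Load Balancer, such rules would live in a web ACL with `REGIONAL` scope associated with the ALB.

```python
import json
from typing import List


def geo_allowlist_rule(allowed: List[str], priority: int = 0) -> dict:
    """Whitelist style: block every request whose country is NOT in `allowed`."""
    return {
        "Name": "geo-allowlist",  # hypothetical rule name
        "Priority": priority,
        # NotStatement inverts the geo match, so the rule matches all other countries
        "Statement": {
            "NotStatement": {
                "Statement": {"GeoMatchStatement": {"CountryCodes": allowed}}
            }
        },
        "Action": {"Block": {}},
        "VisibilityConfig": {
            "SampledRequestsEnabled": True,
            "CloudWatchMetricsEnabled": True,
            "MetricName": "geo-allowlist",
        },
    }


def geo_blocklist_rule(blocked: List[str], priority: int = 1) -> dict:
    """Blacklist style: block requests coming from the countries in `blocked`."""
    return {
        "Name": "geo-blocklist",  # hypothetical rule name
        "Priority": priority,
        "Statement": {"GeoMatchStatement": {"CountryCodes": blocked}},
        "Action": {"Block": {}},
        "VisibilityConfig": {
            "SampledRequestsEnabled": True,
            "CloudWatchMetricsEnabled": True,
            "MetricName": "geo-blocklist",
        },
    }


# Hypothetical country lists (ISO 3166-1 alpha-2 codes).
allow_rule = geo_allowlist_rule(["US", "CA"])
block_rule = geo_blocklist_rule(["DE"])
print(json.dumps(allow_rule, indent=2))
```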

55. S3 object ownership

- If another account uploads an object into my bucket, that object is owned by the uploading account, not by me (so I cannot access it).

To access it, the policy has to be modified.

By default, an S3 object is owned by the AWS account that uploaded it. This is true even when the bucket is owned by another account. Because the Amazon Redshift data files from the UNLOAD command were put into your bucket by another account, you (the bucket owner) don't have default permission to access those files.
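One common way to avoid this with ACL-enabled buckets is a bucket policy that only lets the other account upload if it grants the bucket owner full control. A minimal sketch, assuming a hypothetical bucket name and uploader account; the uploader would then send the `x-amz-acl: bucket-owner-full-control` header (for example `ACL="bucket-owner-full-control"` in boto3's `put_object`). Newer buckets may instead use the S3 Object Ownership setting "Bucket owner enforced", which disables ACLs and makes the bucket owner own every object, in which case this pattern is unnecessary.

```python
import json

BUCKET = "example-bucket"          # hypothetical bucket name
UPLOADER_ACCOUNT = "111122223333"  # hypothetical account that uploads objects


def require_owner_full_control(bucket: str, uploader_account: str) -> dict:
    """Allow cross-account uploads only when the bucket owner is granted full control."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowUploadWithOwnerFullControl",
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{uploader_account}:root"},
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
                # The upload is allowed only if it carries this canned ACL.
                "Condition": {
                    "StringEquals": {"s3:x-amz-acl": "bucket-owner-full-control"}
                },
            }
        ],
    }


ownership_policy = require_owner_full_control(BUCKET, UPLOADER_ACCOUNT)
print(json.dumps(ownership_policy, indent=2))
```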

56. CloudFront, S3 Transfer Acceleration

When transfer speed needs to be improved:

- CloudFront is the better fit when objects are 1 GB or smaller. For larger objects, Transfer Acceleration is the better choice.

57. RDS IAM Authentication

- The RDS databases that can be accessed with IAM Authentication are only MySQL and PostgreSQL (Aurora MySQL and Aurora PostgreSQL support it as well).

58. Amazon Transcribe

- Converts audio files into text.

59. Global Accelerator

Global Accelerator is a networking service that sends your users’ traffic through Amazon Web Services’ global network infrastructure, improving your internet user performance by up to 60%. When the internet is congested, Global Accelerator’s automatic routing optimizations will help keep your packet loss, jitter, and latency consistently low.

With Global Accelerator, you are provided two global static customer-facing IPs to simplify traffic management. On the back end, add or remove your AWS application origins, such as Network Load Balancers, Application Load Balancers, Elastic IPs, and EC2 Instances, without making user-facing changes. To mitigate endpoint failure, Global Accelerator automatically re-routes your traffic to your nearest healthy available endpoint.

60. ALB

An Application Load Balancer cannot be assigned an Elastic IP address (static IP address).

You cannot attach an Elastic IP to an ALB!

61. DynamoDB encryption

By default, all DynamoDB tables are encrypted under an AWS owned customer master key (CMK), which does not write to CloudTrail logs - AWS owned CMKs are a collection of CMKs that an AWS service owns and manages for use in multiple AWS accounts. Although AWS owned CMKs are not in your AWS account, an AWS service can use its AWS owned CMKs to protect the resources in your account.

You do not need to create or manage the AWS owned CMKs. However, you cannot view, use, track, or audit them. You are not charged a monthly fee or usage fee for AWS owned CMKs and they do not count against the AWS KMS quotas for your account.

The key rotation strategy for an AWS owned CMK is determined by the AWS service that creates and manages the CMK.

All DynamoDB tables are encrypted. There is no option to enable or disable encryption for new or existing tables. By default, all tables are encrypted under an AWS owned customer master key (CMK) in the DynamoDB service account. However, you can select an option to encrypt some or all of your tables under a customer-managed CMK or the AWS managed CMK for DynamoDB in your account.

62. ASG details

- You can only specify one launch configuration for an EC2 Auto Scaling group at a time, and you can't modify a launch configuration after you've created it.

- Data is not automatically copied from existing instances to new instances. You can use lifecycle hooks to copy the data.

- EC2 Auto Scaling groups are regional constructs. They can span Availability Zones, but not AWS regions.

- If you have an EC2 Auto Scaling group (ASG) with running instances and you choose to delete the ASG, the instances will be terminated and the ASG will be deleted.

63. AWS Lambda details

1. By default, Lambda functions always operate from an AWS-owned VPC and hence have access to any public internet address or public AWS APIs. Once a Lambda function is VPC-enabled, it will need a route through a NAT gateway in a public subnet to access public resources - Lambda functions always operate from an AWS-owned VPC. By default, your function has the full ability to make network requests to any public internet address — this includes access to any of the public AWS APIs. For example, your function can interact with AWS DynamoDB APIs to PutItem or Query for records. You should only enable your functions for VPC access when you need to interact with a private resource located in a private subnet. An RDS instance is a good example.

Once your function is VPC-enabled, all network traffic from your function is subject to the routing rules of your VPC/Subnet. If your function needs to interact with a public resource, you will need a route through a NAT gateway in a public subnet.

2. Since Lambda functions can scale extremely quickly, it's a good idea to deploy a CloudWatch Alarm that notifies your team when function metrics such as ConcurrentExecutions or Invocations exceed the expected threshold - Since Lambda functions can scale extremely quickly, this means you should have controls in place to notify you when you have a spike in concurrency. A good idea is to deploy a CloudWatch Alarm that notifies your team when function metrics such as ConcurrentExecutions or Invocations exceed your threshold. You should create an AWS Budget so you can monitor costs on a daily basis.

3. If you intend to reuse code in more than one Lambda function, you should consider creating a Lambda Layer for the reusable code - You can configure your Lambda function to pull in additional code and content in the form of layers. A layer is a ZIP archive that contains libraries, a custom runtime, or other dependencies. With layers, you can use libraries in your function without needing to include them in your deployment package. Layers let you keep your deployment package small, which makes development easier. A function can use up to 5 layers at a time.

You can create layers, or use layers published by AWS and other AWS customers. Layers support resource-based policies for granting layer usage permissions to specific AWS accounts, AWS Organizations, or all accounts. The total unzipped size of the function and all layers can't exceed the unzipped deployment package size limit of 250 MB.

4. Lambda allocates compute power in proportion to the memory you allocate to your function. This means you can over-provision memory to run your functions faster and potentially reduce your costs. However, AWS recommends that you should not over-provision your function timeout settings. Always understand your code performance and set a function timeout accordingly. Overprovisioning the function timeout often results in Lambda functions running longer than expected and unexpected costs.

5. Incorrect statement

The bigger your deployment package, the slower your Lambda function will cold-start. Hence, AWS suggests packaging dependencies as a separate package from the actual Lambda package - This statement is incorrect and acts as a distractor. All the dependencies are also packaged into the single Lambda deployment package.
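The claim in item 4 that more memory can cost less is easiest to see with the billing arithmetic. Lambda's duration charge is based on allocated memory multiplied by execution time (GB-seconds), plus a per-request charge that this sketch ignores. The durations below are hypothetical, chosen only to illustrate a function that speeds up enough with more memory (and therefore more CPU) to offset the larger allocation.

```python
def gb_seconds(memory_mb: int, duration_ms: float) -> float:
    """Billed compute for one invocation in GB-seconds (memory x duration)."""
    return (memory_mb / 1024) * (duration_ms / 1000)


# Hypothetical measurements of the same function at two memory sizes.
small = gb_seconds(512, 2000)   # 0.5 GB for 2.0 s
large = gb_seconds(1024, 800)   # 1.0 GB for 0.8 s

print(f"512 MB:  {small:.2f} GB-s")   # 512 MB:  1.00 GB-s
print(f"1024 MB: {large:.2f} GB-s")   # 1024 MB: 0.80 GB-s
```

With these assumed numbers the 1024 MB setting is cheaper per invocation; if the speedup were smaller it would cost more, so the trade-off has to be measured per function.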

64. API Gateway, SQS, Amazon Kinesis

- API Gateway can throttle requests (using the token bucket algorithm, where a token counts for a request. Specifically, API Gateway sets a limit on a steady-state rate and a burst of request submissions against all APIs in your account. In the token bucket algorithm, the burst is the maximum bucket size.)

- SQS can buffer requests.

Amazon Simple Queue Service (SQS) is a fully managed message queuing service that enables you to decouple and scale microservices, distributed systems, and serverless applications. Amazon SQS offers buffer capabilities to smooth out temporary volume spikes without losing messages or increasing latency.

- Amazon Kinesis - Amazon Kinesis is a fully managed, scalable service that can ingest, buffer, and process streaming data in real-time.
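To make the token bucket idea from the API Gateway bullet concrete, here is a minimal single-threaded sketch. It is not API Gateway's actual implementation; the `TokenBucket` class, its refill-on-demand approach, and the rate and burst values are illustrative assumptions. `rate` plays the role of the steady-state limit and `burst` the maximum bucket size.

```python
from dataclasses import dataclass


@dataclass
class TokenBucket:
    """Token bucket: refills `rate` tokens per second, holds at most `burst` tokens."""
    rate: float        # steady-state requests per second
    burst: int         # bucket capacity (maximum burst)
    tokens: float = 0.0
    last: float = 0.0  # timestamp of the previous call, in seconds

    def __post_init__(self) -> None:
        self.tokens = float(self.burst)  # start with a full bucket

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, capped at the bucket size.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(float(self.burst), self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1  # each request consumes one token
            return True
        return False  # API Gateway would answer 429 Too Many Requests here


bucket = TokenBucket(rate=2, burst=3)  # hypothetical limits
first_second = [bucket.allow(0.0) for _ in range(5)]
later = bucket.allow(0.5)  # 0.5 s x 2 tokens/s refills one token
print(first_second, later)  # [True, True, True, False, False] True
```

The burst of five simultaneous requests drains the three-token bucket, and requests succeed again only as the steady-state rate refills it.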

65. Redis, Redis Auth

Amazon ElastiCache for Redis is also HIPAA Eligible Service.

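IAM Auth is not supported by ElastiCache (true when this was written; IAM authentication was later added for ElastiCache for Redis 7.0 and above). Redis authentication tokens enable Redis to require a token (password) before allowing clients to execute commands, thereby improving data security.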

66. KMS Encryption

AWS Key Management Service (KMS) makes it easy for you to create and manage cryptographic keys and control their use across a wide range of AWS services and in your applications. AWS KMS is a secure and resilient service that uses hardware security modules that have been validated under FIPS 140-2. KMS does not support username and password for enabling encryption.

67. S3 Glacier Deep Archive, S3 Glacier

S3 Glacier Deep Archive

: is up to 75% less expensive than S3 Glacier

: provides retrieval within 12 hours using the Standard retrieval speed.

: You may also reduce retrieval costs by selecting Bulk retrieval, which will return data within 48 hours.

68. IPsec VPN connection (site-to-site VPN)

To set this up, you need a Virtual Private Gateway on the AWS VPC side and a Customer Gateway representing the on-premises VPN device.

The following are the key concepts for a site-to-site VPN:

Virtual private gateway: A Virtual Private Gateway (also known as a VPN Gateway) is the endpoint on the AWS VPC side of your VPN connection.

VPN connection: A secure connection between your on-premises equipment and your VPCs.

VPN tunnel: An encrypted link where data can pass from the customer network to or from AWS.

Customer Gateway: An AWS resource that provides information to AWS about your Customer Gateway device.

Customer Gateway device: A physical device or software application on the customer side of the Site-to-Site VPN connection.

69. Redshift Spectrum

Lets you query data in S3 efficiently without loading it into Redshift first.

Amazon Redshift is a fully-managed petabyte-scale cloud-based data warehouse product designed for large scale data set storage and analysis.

Using Amazon Redshift Spectrum, you can efficiently query and retrieve structured and semistructured data from files in Amazon S3 without having to load the data into Amazon Redshift tables. Amazon Redshift Spectrum resides on dedicated Amazon Redshift servers that are independent of your cluster. Redshift Spectrum pushes many compute-intensive tasks, such as predicate filtering and aggregation, down to the Redshift Spectrum layer. Thus, Redshift Spectrum queries use much less of your cluster's processing capacity than other queries.

70. Amazon Neptune

Amazon Neptune is a fast, reliable, fully managed graph database service that makes it easy to build and run applications that work with highly connected datasets. The core of Amazon Neptune is a purpose-built, high-performance graph database engine optimized for storing billions of relationships and querying the graph with milliseconds latency. Neptune powers graph use cases such as recommendation engines, fraud detection, knowledge graphs, drug discovery, and network security.

Amazon Neptune is highly available, with read replicas, point-in-time recovery, continuous backup to Amazon S3, and replication across Availability Zones. Neptune is secure with support for HTTPS encrypted client connections and encryption at rest. Neptune is fully managed, so you no longer need to worry about database management tasks such as hardware provisioning, software patching, setup, configuration, or backups.

Amazon Neptune can quickly and easily process large sets of user-profiles and interactions to build social networking applications. Neptune enables highly interactive graph queries with high throughput to bring social features into your applications. For example, if you are building a social feed into your application, you can use Neptune to provide results that prioritize showing your users the latest updates from their family, from friends whose updates they ‘Like,’ and from friends who live close to them.

71. Global Accelerator vs CloudFront

: Global Accelerator is a good fit for non-HTTP use cases, such as gaming (UDP), IoT (MQTT), or Voice over IP. Therefore, this option is correct.

: CloudFront supports HTTP/RTMP protocol based requests

72. NLB target

Scenario: multiple instances in a public subnet, with these instance IDs specified as the targets for the NLB

Answer: Traffic is routed to instances using the primary private IP address specified in the primary network interface for the instance

Explanation:

If you specify targets using an instance ID, traffic is routed to instances using the primary private IP address specified in the primary network interface for the instance. The load balancer rewrites the destination IP address from the data packet before forwarding it to the target instance.

If you specify targets using IP addresses, you can route traffic to an instance using any private IP address from one or more network interfaces. This enables multiple applications on an instance to use the same port. Note that each network interface can have its own security group. The load balancer rewrites the destination IP address before forwarding it to the target.

73. Direct Connect

Direct Connect involves significant monetary investment and can take more than a month to set up.

74. Accessing resources in another account within the organization

Create a new IAM role with the required permissions to access the resources in the production environment. The users can then assume this IAM role while accessing the resources from the production environment.
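A sketch of the two policy documents this setup typically involves, assuming hypothetical account IDs and a hypothetical role name. The trust policy goes on the role in the production account and says who may assume it; the second policy is attached to the users or groups in the other account so they are allowed to call AssumeRole on that role. The role's permissions policy (what it can actually do in production) is separate and not shown. Users would then obtain temporary credentials through STS AssumeRole (for example boto3's `sts.assume_role(RoleArn=..., RoleSessionName=...)`).

```python
import json

PROD_ACCOUNT = "444455556666"     # hypothetical production account
DEV_ACCOUNT = "111122223333"      # hypothetical account whose users need access
ROLE_NAME = "ProdResourceAccess"  # hypothetical role name

# 1) Trust policy on the role in the production account: who may assume it.
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"AWS": f"arn:aws:iam::{DEV_ACCOUNT}:root"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# 2) Identity policy for users/groups in the other account: lets them assume that role.
assume_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": "sts:AssumeRole",
            "Resource": f"arn:aws:iam::{PROD_ACCOUNT}:role/{ROLE_NAME}",
        }
    ],
}

print(json.dumps(trust_policy, indent=2))
print(json.dumps(assume_policy, indent=2))
```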

74. Dedicated Host vs Dedicated Instance

Scenario: The company has multiple long-term server bound licenses across the application stack and the CTO wants to continue to utilize those licenses while moving to AWS.

Answer:

Use EC2 dedicated hosts

You can use Dedicated Hosts to launch Amazon EC2 instances on physical servers that are dedicated for your use. Dedicated Hosts give you additional visibility and control over how instances are placed on a physical server, and you can reliably use the same physical server over time. As a result, Dedicated Hosts enable you to use your existing server-bound software licenses like Windows Server and address corporate compliance and regulatory requirements.

Incorrect options:

Use EC2 dedicated instances - Dedicated instances are Amazon EC2 instances that run in a VPC on hardware that's dedicated to a single customer. Your dedicated instances are physically isolated at the host hardware level from instances that belong to other AWS accounts. Dedicated instances may share hardware with other instances from the same AWS account that are not dedicated instances. Dedicated instances cannot be used for existing server-bound software licenses.

75. DocumentDB

Amazon DocumentDB is a fast, scalable, highly available, and fully managed document database service that supports MongoDB workloads. As a document database, Amazon DocumentDB makes it easy to store, query, and index JSON data. DocumentDB is not an in-memory database, so this option is incorrect.

76. AWS Database Migration Service (when data in RDS needs to be moved to Redshift)

AWS Database Migration Service helps you migrate databases to AWS quickly and securely. The source database remains fully operational during the migration, minimizing downtime to applications that rely on the database. With AWS Database Migration Service, you can continuously replicate your data with high availability and consolidate databases into a petabyte-scale data warehouse by streaming data to Amazon Redshift and Amazon S3.

You can migrate data to Amazon Redshift databases using AWS Database Migration Service. Amazon Redshift is a fully managed, petabyte-scale data warehouse service in the cloud. With an Amazon Redshift database as a target, you can migrate data from all of the other supported source databases.

The Amazon Redshift cluster must be in the same AWS account and the same AWS Region as the replication instance. During a database migration to Amazon Redshift, AWS DMS first moves data to an Amazon S3 bucket. When the files reside in an Amazon S3 bucket, AWS DMS then transfers them to the proper tables in the Amazon Redshift data warehouse. AWS DMS creates the S3 bucket in the same AWS Region as the Amazon Redshift database. The AWS DMS replication instance must be located in that same region.

77. user data

User data lets you customize dynamic installations at boot time.

78. Amazon FSx for Lustre

Amazon FSx for Lustre makes it easy and cost-effective to launch and run the world’s most popular high-performance file system. It is used for workloads such as machine learning, high-performance computing (HPC), video processing, and financial modeling. The open-source Lustre file system is designed for applications that require fast storage – where you want your storage to keep up with your compute. FSx for Lustre integrates with Amazon S3, making it easy to process data sets with the Lustre file system. When linked to an S3 bucket, an FSx for Lustre file system transparently presents S3 objects as files and allows you to write changed data back to S3.

FSx for Lustre provides the ability to both process the 'hot data' in a parallel and distributed fashion as well as easily store the 'cold data' on Amazon S3. Therefore this option is the BEST fit for the given problem statement.

79. Amazon FSx for Windows File Server

- Amazon FSx for Windows File Server provides fully managed, highly reliable file storage that is accessible over the industry-standard Server Message Block (SMB) protocol. It is built on Windows Server, delivering a wide range of administrative features such as user quotas, end-user file restore, and Microsoft Active Directory (AD) integration. FSx for Windows does not allow you to present S3 objects as files and does not allow you to write changed data back to S3. Therefore you cannot reference the "cold data" with quick access for reads and updates at low cost. Hence this option is not correct.

80. VPC sharing

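- Used to share a VPC with other accounts within an organization (it can be centrally managed).

- When the VPC owner shares one or more subnets with other accounts, those accounts can view, create, modify, and delete their own resources in them.

- What you share is not the VPC itself but the subnets inside the VPC!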

VPC sharing (part of Resource Access Manager) allows multiple AWS accounts to create their application resources such as EC2 instances, RDS databases, Redshift clusters, and Lambda functions, into shared and centrally-managed Amazon Virtual Private Clouds (VPCs). To set this up, the account that owns the VPC (owner) shares one or more subnets with other accounts (participants) that belong to the same organization from AWS Organizations. After a subnet is shared, the participants can view, create, modify, and delete their application resources in the subnets shared with them. Participants cannot view, modify, or delete resources that belong to other participants or the VPC owner.

You can share Amazon VPCs to leverage the implicit routing within a VPC for applications that require a high degree of interconnectivity and are within the same trust boundaries. This reduces the number of VPCs that you create and manage while using separate accounts for billing and access control.

81. S3 bucket policy

- To control access at both the account level and the user level, use a bucket policy.

- To control only users, use IAM policies; to control only accounts, use ACLs.

Bucket policies in Amazon S3 can be used to add or deny permissions across some or all of the objects within a single bucket. Policies can be attached to users, groups, or Amazon S3 buckets, enabling centralized management of permissions. With bucket policies, you can grant users within your AWS Account or other AWS Accounts access to your Amazon S3 resources.

You can further restrict access to specific resources based on certain conditions. For example, you can restrict access based on request time (Date Condition), whether the request was sent using SSL (Boolean Conditions), a requester’s IP address (IP Address Condition), or based on the requester's client application (String Conditions). To identify these conditions, you use policy keys.
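A minimal sketch of two of the conditions mentioned above (SSL and IP address) in one bucket policy, assuming a hypothetical bucket name and a documentation-range CIDR standing in for an office network. `aws:SecureTransport` and `aws:SourceIp` are global condition keys; note that `aws:SourceIp` does not apply to requests arriving through a VPC endpoint, so a real policy may need extra conditions for that path.

```python
import json

BUCKET = "example-bucket"       # hypothetical bucket name
OFFICE_CIDR = "203.0.113.0/24"  # hypothetical office network (documentation range)

bucket_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            # Boolean condition: reject any request not sent over SSL/TLS
            "Sid": "DenyNonTLS",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:*",
            "Resource": [f"arn:aws:s3:::{BUCKET}", f"arn:aws:s3:::{BUCKET}/*"],
            "Condition": {"Bool": {"aws:SecureTransport": "false"}},
        },
        {
            # IP address condition: reject reads from outside the office network
            "Sid": "DenyReadsOutsideOffice",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{BUCKET}/*",
            "Condition": {"NotIpAddress": {"aws:SourceIp": OFFICE_CIDR}},
        },
    ],
}

print(json.dumps(bucket_policy, indent=2))
```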

Type of access control in S3:

Access Control Lists (ACLs) - Within Amazon S3, you can use ACLs to give read or write access on buckets or objects to groups of users. With ACLs, you can only grant other AWS accounts (not specific users) access to your Amazon S3 resources. So, this is not the right choice for the current requirement.

82. OpsWorks

Remember Chef and Puppet.

AWS OpsWorks is a configuration management service that provides managed instances of Chef and Puppet. Chef and Puppet are automation platforms that allow you to use code to automate the configurations of your servers. OpsWorks lets you use Chef and Puppet to automate how servers are configured, deployed and managed across your Amazon EC2 instances or on-premises compute environments.

83. SQS detail

- By default, FIFO queues support up to 3,000 messages per second with batching, or up to 300 messages per second (300 send, receive, or delete operations per second) without batching.

- The name of a FIFO queue must end with the .fifo suffix.

- There is no way to convert a standard queue into a FIFO queue. You have to create a new queue.
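A sketch of how the FIFO rules above show up when creating a queue. The helper `fifo_create_queue_kwargs` and the queue name are hypothetical; the returned dict uses the `QueueName` and `Attributes` (`FifoQueue`, `ContentBasedDeduplication`) parameters of the SQS CreateQueue API, so it could be passed to something like boto3's `sqs.create_queue(**kwargs)`. The last lines redo the default-quota arithmetic from the first bullet.

```python
FIFO_SUFFIX = ".fifo"


def fifo_create_queue_kwargs(queue_name: str, content_dedup: bool = True) -> dict:
    """Build CreateQueue parameters for a FIFO queue (hypothetical helper)."""
    if not queue_name.endswith(FIFO_SUFFIX):
        raise ValueError(f"FIFO queue names must end with {FIFO_SUFFIX!r}")
    return {
        "QueueName": queue_name,
        "Attributes": {
            # FifoQueue can only be set when the queue is created, which is why a
            # standard queue cannot be switched to FIFO and a new queue is needed.
            "FifoQueue": "true",
            "ContentBasedDeduplication": "true" if content_dedup else "false",
        },
    }


# Default quota from the notes: 300 operations/s, and one batch call carries up to 10 messages.
OPS_PER_SECOND = 300
MAX_BATCH_SIZE = 10
max_messages_per_second = OPS_PER_SECOND * MAX_BATCH_SIZE  # 3,000 with batching

kwargs = fifo_create_queue_kwargs("orders.fifo")  # hypothetical queue name
print(kwargs)
print(max_messages_per_second)
```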

83. VPC endpoint

A VPC endpoint enables you to privately connect your VPC to supported AWS services and VPC endpoint services powered by AWS PrivateLink without requiring an internet gateway, NAT device, VPN connection, or AWS Direct Connect connection. Instances in your VPC do not require public IP addresses to communicate with resources in the service. Traffic between your VPC and the other service does not leave the Amazon network.

Endpoints are virtual devices. They are horizontally scaled, redundant, and highly available VPC components. They allow communication between instances in your VPC and services without imposing availability risks or bandwidth constraints on your network traffic.

There are two types of VPC endpoints: Interface Endpoints and Gateway Endpoints. An Interface Endpoint is an Elastic Network Interface with a private IP address from the IP address range of your subnet that serves as an entry point for traffic destined to a supported service.

A Gateway Endpoint is a gateway that you specify as a target for a route in your route table for traffic destined to a supported AWS service. The following AWS services are supported: Amazon S3 and DynamoDB.

You must remember that only these two services use a VPC gateway endpoint. The rest of the AWS services use VPC interface endpoints.
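The gateway-versus-interface rule can be captured in a small lookup. This is a sketch only: `endpoint_plan` is a hypothetical helper, and its output uses the `ServiceName` and `VpcEndpointType` parameters of the EC2 CreateVpcEndpoint API. A real call also needs a `VpcId` plus route table IDs (gateway) or subnet IDs and security groups (interface). S3 also offers interface endpoints, but this sketch always picks the gateway type for S3 and DynamoDB.

```python
GATEWAY_ENDPOINT_SERVICES = {"s3", "dynamodb"}


def endpoint_plan(service: str, region: str) -> dict:
    """Choose the VPC endpoint type for an AWS service (hypothetical helper)."""
    service_name = f"com.amazonaws.{region}.{service}"
    if service in GATEWAY_ENDPOINT_SERVICES:
        # Gateway endpoint: added as a route target in the route table, no ENI.
        return {"ServiceName": service_name, "VpcEndpointType": "Gateway"}
    # Every other service: interface endpoint, an ENI with a private IP in your subnet.
    return {"ServiceName": service_name, "VpcEndpointType": "Interface"}


for svc in ("s3", "dynamodb", "sqs"):
    print(endpoint_plan(svc, "us-east-1"))
```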

84. Cognito Authentication via Cognito User Pool

- This service cannot be connected directly to CloudFront.

- It can be integrated with an ALB to authenticate users.
